Many folks have become accustomed to having the option to participate in meetings remotely. It can be a frustrating surprise to sit down to join that next meeting and find that it doesn’t have an online meeting link. (Ask me how I know.)

Similarly, it can be frustrating for meeting organizers who have planned activities that require people to be present in person to have to pivot or reschedule when attendees didn’t realize they were expected to attend in person.

I have two quick tips:

1. Meeting organizers: call out when you expect in-person attendance.

New Outlook provides an easy option to flag a meeting as in-person:

![Image of a new meeting window in new Outlook, with the "In-person event" toggle turned on, that toggle highlighted in red with a red arrow pointing to the words "[in-person]" added to the meeting title.](https://gcd.w3.uvm.edu/files/2025/05/NewOutlook-Meeting-InPerson-700x413.png)

Choosing this option adds a bracketed “[in-person]” label to the meeting title, and the label also appears in the location info in the meeting invite (see below). If you aren’t using new Outlook, you can add the bracketed label to your meeting title manually. It also doesn’t hurt to add an explicit comment in the meeting invite, like “Please plan to attend in person.”

Additionally, don’t include a Teams meeting link if you don’t plan to use it. That just sends mixed messages.

2. Meeting participants: actively review and respond to meeting requests.

RSVPing to meeting requests is good netiquette. (I just dated myself, didn’t I?)

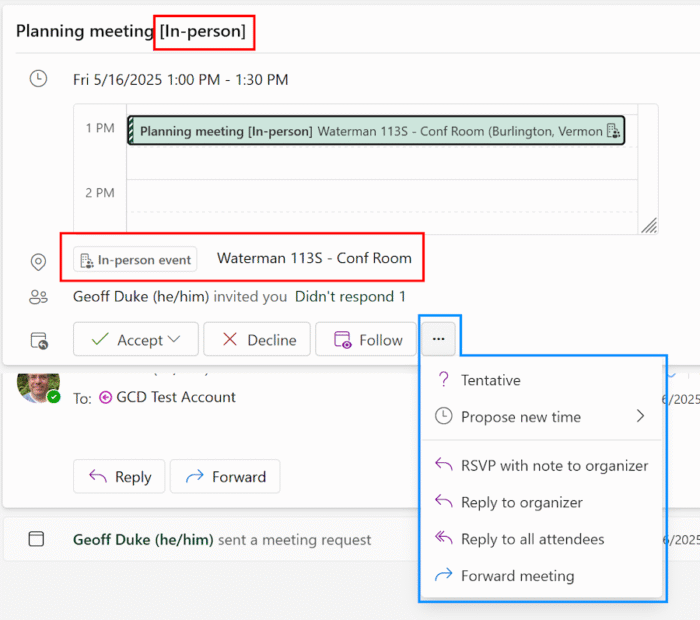

This gives you the chance to review the meeting for expectations, including whether the organizer requests in-person attendance. If the organizer used new Outlook and the handy in-person toggle shown above, you’ll see (in new Outlook) the in-person tag not only in the event title, but in the location as well:

You can also reply with more than just “Accept” or “Decline.” You can reply to the request and include a note, like “This is my usual work-from-home day. Do you need me in person?” or even “I appreciate being included. I’d like to see an agenda to make sure I can contribute in a meaningful way.”

If you really prefer “Classic Outlook” or a different mail app altogether, you can access this functionality as needed with Outlook Online.

Bonus tip: set your work location and hours

You can set your work hours and work location in new Outlook by going to Settings > Calendar > Work hours and location. This information helps meeting organizers respect your schedule and telework plans. See “Set your work hours and location in Outlook” on Microsoft Support for specific instructions.

Wasn’t that fun? Or at least helpful? Please share this with others.

Happy Friday!